The ultimate guide to using Bing Webmaster Tools – Part 2

Bing Webmaster Tools (BWT) has a lot to offer. Are you ready to dig in to learn more? In this installment, I’m going to break down the first four of BWT’s 10 sections and give an overview of the tools in each.

The four sections we will cover here are:

- My Sites.

- Dashboards.

- Configure My Site.

- Reports & Data.

Once you have verified site ownership with BWT, you will be able to start making use of all of the free tools and data in each section. Let’s dig in!

Section: My Sites

My Sites allows you to view the sites you have access to and add additional sites to be managed.

It provides you with a quick thumbnail of your website, the number of messages in your Message Center inbox and the percentage change in clicks from search, search impressions, pages crawled and pages indexed. From My Sites, you can quickly navigate to a site’s dashboard by clicking on it in the table.

Section: Dashboards

The Site Dashboard is an easy-to-read snapshot of:

- Your site’s overall activity.

- The list of sitemaps that have been submitted, indexed and crawled.

- Your site’s top organic search queries.

- Your site’s inbound links.

To get a more comprehensive and detailed report, you can either click on the link below the snapshot or use the corresponding navigation in the menu on the left-hand side of the toolset.

Section: Configure My Site

In my opinion, the options available in the Configure My Site navigation are the most important features in BWT.

This is where you provide input directly to Bing on your basic website configuration so it can crawl, access and index your website.

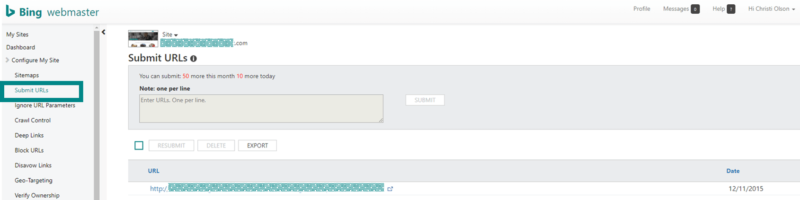

Submitting sitemaps and URLs allows you to tell Bing about the structure of your website and make sure Bing is aware of the pages you want included in the index. In the Submit a URL section, you can submit page URLs directly to the Bing index. This feature is limited to 10 URLs per day and 50 per month. This tool is also publicly accessible.
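
If you’re planning a batch of submissions, it helps to budget against both limits at once. Here’s a minimal Python sketch. It assumes the 10-per-day and 50-per-month limits described above and assumes the monthly limit resets each calendar month; Bing may count it differently or change the limits. You’d keep your own log of submission dates, and the function name is hypothetical.

```python
from datetime import date

# Limits as described in this article; Bing may change them.
DAILY_LIMIT = 10
MONTHLY_LIMIT = 50


def remaining_submissions(history: list, today: date) -> int:
    """Return how many more URLs can be submitted today.

    history: one date per URL already submitted (your own log).
    Assumption: the monthly limit is counted per calendar month.
    """
    used_today = sum(1 for d in history if d == today)
    used_this_month = sum(
        1 for d in history if (d.year, d.month) == (today.year, today.month)
    )
    return max(0, min(DAILY_LIMIT - used_today, MONTHLY_LIMIT - used_this_month))


# Example: 3 URLs submitted on each of July 1-15 (45 total), checked on July 15.
log = [date(2018, 7, day) for day in range(1, 16) for _ in range(3)]
print(remaining_submissions(log, date(2018, 7, 15)))  # 5: the monthly cap binds first
```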

In the Sitemaps section, you can submit, delete or export sitemaps, as well as view the status and last crawl date for the sitemap. You do not have to resubmit a sitemap every time you change it. Bing automatically checks for sitemap updates on a regular basis. However, if you’ve made changes to your sitemap and Bing has not recently crawled your sitemap, you can resubmit it by clicking the “Resubmit” button.

Here are some things you should be looking for:

- Check the status column. Are there any failed items? If yes, you’ll want to investigate why it failed. It could be an issue with the structure of the sitemap, or Bingbot might be blocked from accessing the sitemap.

- Check whether the number of URLs submitted mirrors the number of URLs on your site. If the number is significantly higher or lower, investigate why.

- Check the last crawl date. If you’ve made significant updates to your sitemap and it hasn’t been crawled recently, you can resubmit it.
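
To sanity-check the submitted URL count against what you expect, you can count the `<loc>` entries in a sitemap yourself. Here’s a minimal sketch using Python’s standard library. It assumes a standard sitemaps.org `<urlset>` file; a sitemap index file would need one more level of fetching. It also uses a hypothetical 10% tolerance to stand in for “significantly” higher or lower.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def count_sitemap_urls(xml_text: str) -> int:
    """Count <url><loc> entries in a <urlset> sitemap."""
    root = ET.fromstring(xml_text)
    return len(root.findall(f"{NS}url/{NS}loc"))


def needs_investigation(submitted: int, expected: int, tolerance: float = 0.10) -> bool:
    """Flag a gap larger than `tolerance` (a hypothetical threshold) between counts."""
    if expected == 0:
        return submitted > 0
    return abs(submitted - expected) / expected > tolerance


sitemap = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://www.example.com/</loc></url>"
    "<url><loc>https://www.example.com/products/</loc></url>"
    "</urlset>"
)
count = count_sitemap_urls(sitemap)
print(count, needs_investigation(count, expected=40))  # 2 True
```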

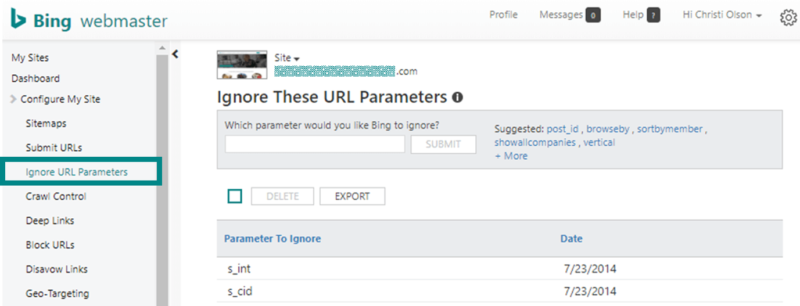

Ignore URL Parameters

The Ignore URL Parameters feature tells Bing which uniform resource locator (URL) parameters can be safely ignored because they don’t alter the content of the page. Sometimes the parameters that appear after the “?” in a URL’s query string can result in multiple variations of the same URL that all point to the same content.

The Ignore URL Parameters feature helps to normalize the multiple versions so that only one version of the page is indexed.

What you should be looking for:

- Ignore campaign parameters and other variables that do not alter the content of a page, such as session IDs.

- If you have a web page that uses a dynamic URL for a component like a product ID (&ProductID=12345) or article ID (&ArticleID=12345) that changes the content on the page, you should NOT add that parameter to the list.
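
To show why this matters, here’s a rough Python sketch of the normalization idea. It strips the parameters you’ve marked as ignorable and keeps the ones that change content, so several URL variations collapse into one. The parameter names in `IGNORED_PARAMS` are hypothetical examples for an imaginary site. This illustrates the concept, not how Bing implements it internally.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that do not change page content on this example site.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}


def normalize_url(url: str) -> str:
    """Drop ignorable query parameters; keep content-changing ones like ProductID."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    ]
    return urlunsplit(
        (parts.scheme, parts.netloc, parts.path, urlencode(kept), parts.fragment)
    )


variants = [
    "https://www.example.com/shop?ProductID=12345&sessionid=abc",
    "https://www.example.com/shop?utm_source=news&ProductID=12345",
    "https://www.example.com/shop?ProductID=12345",
]
print({normalize_url(u) for u in variants})
# {'https://www.example.com/shop?ProductID=12345'}
```

Notice that `ProductID` survives normalization. Adding it to the ignore list would collapse different products into one URL, which is exactly the mistake the second bullet warns against.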

Crawl Control

The Crawl Control feature gives you the option to tell Bingbot to crawl your site at a faster or slower rate by the hour.

At SMX Advanced in June, Frederic Dubut spoke about the Bing crawler and how to use the Crawl Control feature in BWT. In his presentation, he covered how you can use these controls to adjust your crawl budget, which is how much crawling Bingbot thinks your site can handle without hurting its performance.

How you should be using this feature:

You can either use the preset crawl patterns or manually set your own custom crawl pattern based on your specific hourly breakdown of traffic. For example, the 9 a.m.-5 p.m. preset schedule will slow crawl rates during the business day and crawl faster during the late-night and early-morning hours.
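
Conceptually, a crawl pattern is just 24 hourly rate settings. The Python sketch below models that idea under my own assumptions. Rates are expressed on a hypothetical 0-1 scale; in BWT you set them on a graph in the interface, not through code. The first function mirrors the 9 a.m.-5 p.m. preset. The second derives a custom pattern that crawls harder when your own traffic is lowest.

```python
def business_hours_pattern(slow: float = 0.2, fast: float = 1.0) -> list:
    """24 hourly levels: slow from 9:00 to 16:59, fast otherwise (hypothetical scale)."""
    return [slow if 9 <= hour < 17 else fast for hour in range(24)]


def pattern_from_traffic(hourly_visits: list, floor: float = 0.1) -> list:
    """Crawl faster when your traffic is lower; never drop below `floor`."""
    if len(hourly_visits) != 24:
        raise ValueError("expected one visit count per hour")
    peak = max(hourly_visits) or 1  # avoid dividing by zero on a site with no traffic
    return [max(floor, round(1 - visits / peak, 2)) for visits in hourly_visits]


preset = business_hours_pattern()
print(preset[3], preset[10])  # 1.0 0.2

visits = [50] * 7 + [400] * 12 + [120] * 5  # hypothetical hourly visits, midnight first
print(pattern_from_traffic(visits)[:8])
```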

Blocking deep links or URLs

The individual features for Blocking Deep Links and Blocking URLs allow you to block either a specific URL from appearing in the search engine result pages (SERPs) or deep links from appearing as additional linked content.

Deep Links are the organic search equivalent to site links in paid search. They are the links which appear below top-ranked search results, linking to different pages to allow more visibility and choices of content options for the searchers to select.

While today you don’t have the ability to create a deep link, you can block specific URLs from becoming deep links. Both blocking features allow you to block either the specific deep links or the URLs from appearing in the organic search results for 90 days.

The block can be extended for an additional 90 days at any point in time, and there isn’t a limitation to the number of consecutive blocks placed on either deep links or blocked URLs.

Deep links can be blocked at either the URL or country/region level, whereas URL blocks can be created at the directory or specific URL level. URL blocks can also be set to block the cache, so cached copies of the pages are kept out of the search results too.

What you need to know about blocking URLs

- The best way to block URLs from appearing is to add a NOINDEX meta tag to the head section of a page.

- Bingbot needs to be able to access and read the tags on a page, including the NOINDEX tag, so make sure your robots.txt file isn’t blocking Bingbot from accessing the pages you’ve tagged NOINDEX.
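
You can check both points for a given page before relying on NOINDEX. This Python sketch uses the standard library’s `urllib.robotparser` to test whether a robots.txt file lets `bingbot` fetch the URL. It then looks for a `robots` meta tag containing `noindex`. It works on text you’ve already downloaded and doesn’t look at HTTP response headers. The function name and return messages are my own.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class RobotsMetaParser(HTMLParser):
    """Records whether a <meta name="robots"> tag contains noindex."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        content = (attributes.get("content") or "").lower()
        if name == "robots" and "noindex" in content:
            self.noindex = True


def check_noindex_setup(robots_txt: str, page_url: str, page_html: str) -> str:
    """Hypothetical helper: is the NOINDEX tag present and reachable by Bingbot?"""
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    if not robots.can_fetch("bingbot", page_url):
        return "blocked: robots.txt stops Bingbot from reading the NOINDEX tag"
    meta = RobotsMetaParser()
    meta.feed(page_html)
    if meta.noindex:
        return "ok: NOINDEX is readable by Bingbot"
    return "indexable: no NOINDEX tag found"


robots_txt = "User-agent: *\nDisallow: /private/"
html = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(check_noindex_setup(robots_txt, "https://www.example.com/private/old", html))
print(check_noindex_setup(robots_txt, "https://www.example.com/old-page", html))
```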

Disavow Links

Links to your site are viewed as a “vote” for the quality of your website. The Disavow Links tool tells Bing that you don’t trust inbound links from a specific page, directory or domain and want to distance your website from them.

Both Bing and Google recommend that webmasters reach out to site owners to get as many spammy and low-quality links removed as possible before disavowing links. However, if you’ve exhausted all options and can’t make headway on getting links removed, you can use the Disavow Links feature.

What you need to know about Bing Disavow Links

- This feature allows you to submit a page, directory or domain URL to be disavowed so that Bing does not take the specific links into account when assessing your site.

- This is an advanced feature, and it should be used with caution.

- Links that have been disavowed will still appear in the Inbound Link reports.

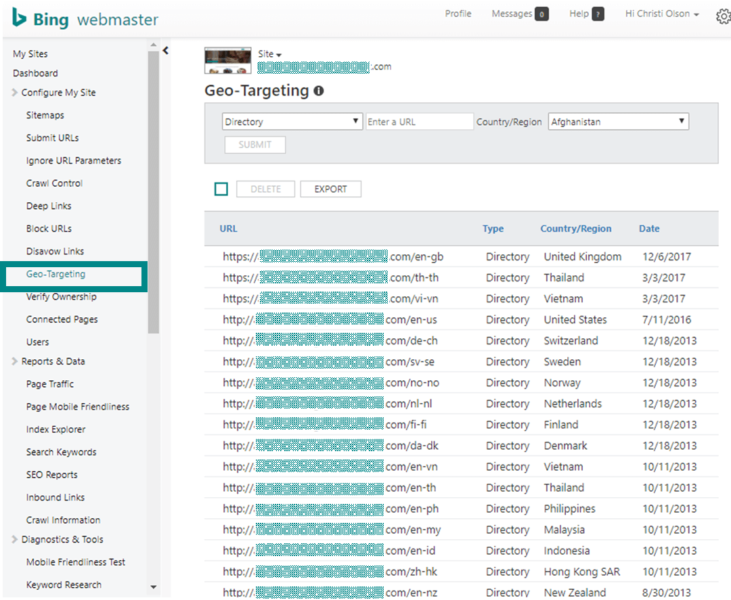

Geo-Targeting controls

The Geo-Targeting controls allow you to provide Bing with guidance on the intended audience of your website by connecting it with a specific country. It also allows you to define a country audience that can be applied at the domain, subdomain, directory, or even the page level for your website.

This geo-targeting feature gives Bing additional signals and labels content dedicated to a specific country or market. It is intended for large-scale websites that have dedicated content built out for specific language and country targeting.

How to use this feature:

- Use the domain, subdomain, directory or specific URL option to identify the country- or region-specific content.

- At Bing, we use the market-language code identifiers within a subfolder, so I would select bing.com/en-us as a directory type and associate it with the United States.

- For Canada, where content can be both country- and language-specific, I would create two directories: bing.com/en-ca and bing.com/fr-ca.
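
If you manage many market folders, it can help to keep your directory-to-country plan in one place before entering it in BWT. This Python sketch is only a planning aid of my own, not part of BWT. The mapping mirrors the examples above, and the example paths are hypothetical.

```python
from typing import Optional
from urllib.parse import urlsplit

# Hypothetical plan mirroring the directory examples above.
DIRECTORY_TARGETS = {
    "/en-us/": "United States",
    "/en-ca/": "Canada",
    "/fr-ca/": "Canada",
}


def target_country(url: str) -> Optional[str]:
    """Return the planned country for a URL, or None if no directory rule covers it."""
    path = urlsplit(url).path.lower()
    for directory, country in DIRECTORY_TARGETS.items():
        if path.startswith(directory):
            return country
    return None


for url in [
    "https://www.bing.com/fr-ca/maps/",
    "https://www.bing.com/en-us/",
    "https://www.bing.com/de-de/",
]:
    print(url, target_country(url))
```

Running a URL list through a check like this makes it easy to spot market folders that don’t have a geo-targeting entry yet. In this example, those are the URLs that return `None`.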

Why I love this feature:

I love this feature and its flexibility. Way, way back in the day when I was managing SEO for Windows at Microsoft, I had to answer the question each quarter as to why our US dot-com site outranked our sites in Canada, the UK, and Australia in their respective country-specific search results. Now, this tool solves that problem for me.

Verify Ownership

The Verify Ownership feature provides three options for verifying that you own the website and should be able to access its data through BWT.
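
One of the verification options is placing a meta tag named `msvalidate.01` in the `<head>` of your home page. Before asking BWT to verify, you can confirm the tag is actually being served. Here’s a minimal Python sketch that works on HTML you’ve already fetched. `ABC123` is a placeholder, not a real code; you’d use the value BWT gives you.

```python
from html.parser import HTMLParser


class BingVerificationParser(HTMLParser):
    """Collects the content of every <meta name="msvalidate.01"> tag."""

    def __init__(self):
        super().__init__()
        self.codes = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "msvalidate.01":
            self.codes.append((attributes.get("content") or "").strip())


def has_bing_verification(html: str, expected_code: str) -> bool:
    """Hypothetical helper: True if the page carries the expected verification code."""
    parser = BingVerificationParser()
    parser.feed(html)
    return expected_code in parser.codes


home_page = '<html><head><meta name="msvalidate.01" content="ABC123" /></head></html>'
print(has_bing_verification(home_page, "ABC123"))  # True
```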

Connected Pages

The Connected Pages feature allows you to associate websites with their corresponding social media accounts to track how many impressions and clicks you get for your brand presence. You can add your various social pages as a group of connected content as long as they contain a link back to your verified website.

How you can use this:

- This appears to be a relatively unknown feature, but one that can provide you with a more holistic view of the value of your web presence and digital brand on Bing.

- Once you’ve enabled your connected pages, you’ll be able to access the Connected Pages dashboard. This dashboard contains Bing search click and impression data for each of your connected pages. It also contains the top keywords your connected pages are ranking for and the top inbound links to your connected pages.

Users

The Users feature allows you to control who has access and the level of access they have to your webmaster tools account. You can add and delete users from here.

First, log in to your webmaster tools account. Select which site you want to provide access to in the My Sites Dashboard. Next, select Users in the “Configure My Site” menu. Add the email address and the access role type (read-only, read/write, admin) and click the “Add” button.

Section: Reports and Data

The Reports and Data section provides comprehensive reporting tools, which include:

- Your page traffic.

- Search keywords.

- Mobile-friendliness report.

- An index explorer to view how Bing sees your site when crawled, which helps identify opportunities and issues.

- SEO reports based on standard best practices and recommendations.

All of the reports can be exported into an Excel file; many of them have additional views of performance data. Select either the “view search keywords” or “view served pages” links to open up a pop-up window with a more detailed view of the performance. Within the pop-up window, click the + symbol to expand and see granular performance data by rank.

Page Traffic

This is the overview of your top pages in terms of search impressions and clicks. By selecting the “View Search Keywords” option, you can dig in deeper to see keyword ranking performance data for the keyword and page combination by rank.

Search keywords

This is the overview of your top keywords in terms of search impressions and clicks. Similar to the Page Traffic report, you can dig deeper to get the list of URLs ranking for a given keyword, and then dig even deeper to see ranking performance data for each page and keyword combination.

The search keywords report is a good tool to understand how your site is performing for each set of keywords by click-through rate.

Savvy search marketers can use this data to see the average click-through rate by position within the SERP and estimate the potential increase in traffic and revenue from ranking higher. This helps make the case for SEO investments to improve your site’s rankings and ratings.
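
Here’s a minimal Python sketch of that calculation. It assumes you’ve exported keyword rows with an average position, impressions and clicks; the tuple layout is my assumption, so match it to your export’s columns. The sketch buckets rows by rounded position, computes click-through rate per bucket and estimates extra clicks if a keyword moved from one position to another. Treat the result as a rough estimate: it ignores factors like query intent and the mix of results on the page.

```python
import math
from collections import defaultdict


def ctr_by_position(rows):
    """rows: (avg_position, impressions, clicks) tuples (assumed layout). Returns {position: CTR}."""
    impressions = defaultdict(int)
    clicks = defaultdict(int)
    for position, imps, clks in rows:
        bucket = math.floor(position + 0.5)  # round half up, e.g. 1.5 -> 2
        impressions[bucket] += imps
        clicks[bucket] += clks
    return {p: clicks[p] / impressions[p] for p in sorted(impressions) if impressions[p]}


def extra_clicks(impressions, current_pos, target_pos, ctr):
    """Estimated additional clicks from moving a keyword to target_pos."""
    return impressions * (ctr[target_pos] - ctr[current_pos])


rows = [(1.2, 1000, 300), (1.4, 1000, 100), (3.4, 2000, 100)]
ctr = ctr_by_position(rows)
print(ctr)  # {1: 0.2, 3: 0.05}
print(round(extra_clicks(5000, 3, 1, ctr)))  # 750
```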

Inbound Links

This is the report showing inbound links to your website over time, along with a breakdown of the pages within your site the links are pointing to.

You should use this report to see if you are gaining or losing links over time. Clicking on the link count for a given URL will provide a comprehensive inbound link report, along with the URL of the linking website and the anchor text used.

You can export the inbound link report to get a better understanding of your link profile. Using Excel, I take the source URLs and strip them down to the root domain level and then look at my link profile for diversity and to see if it looks like a “natural” link portfolio.

Here are the criteria I use when looking for “natural” inbound links:

- Diversity in the anchor text.

- Diversity in the sites linking to you.

- Diversity in the types of sites linking to you.

- Diversity in which pages on your site the links point to.
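
The Excel steps above can also be scripted. This Python sketch assumes rows of (source URL, anchor text, target URL); that layout is my assumption, not BWT’s documented export format. It strips sources to a naive root domain (the last two host labels, which is wrong for suffixes like .co.uk) and summarizes three of the four diversity criteria. Diversity in the types of sites still needs human judgment.

```python
from collections import Counter
from urllib.parse import urlsplit


def root_domain(url: str) -> str:
    """Naive root domain: the last two host labels (wrong for suffixes like .co.uk)."""
    host = (urlsplit(url).hostname or "").lower()
    return ".".join(host.split(".")[-2:])


def link_profile(rows):
    """rows: (source_url, anchor_text, target_url) tuples (assumed export layout)."""
    total = len(rows)
    if total == 0:
        return {}
    domains = Counter(root_domain(source) for source, _, _ in rows)
    anchors = Counter(anchor.strip().lower() for _, anchor, _ in rows)
    targets = Counter(urlsplit(target).path or "/" for _, _, target in rows)
    return {
        "referring_domains": len(domains),
        "top_domain_share": domains.most_common(1)[0][1] / total,
        "distinct_anchors": len(anchors),
        "top_anchor_share": anchors.most_common(1)[0][1] / total,
        "distinct_target_pages": len(targets),
    }


links = [
    ("https://blog.example.org/post", "Bing tips", "https://www.example.com/"),
    ("https://www.example.org/list", "bing tips", "https://www.example.com/tools"),
    ("http://another.net/page", "click here", "https://www.example.com/"),
]
print(link_profile(links))
```

A high top-domain or top-anchor share is the kind of concentration I’d look at more closely.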

Once I get an idea where my backlinks are coming from and the anchors being used, I use a tool like Open Site Explorer, Majestic Site Explorer or SEMrush Backlink Management Tool to get more details about my inbound links and the sites they are from. I look at the number of links using nofollow attributes and link freshness, and I see if there are spammy links I should be disavowing.

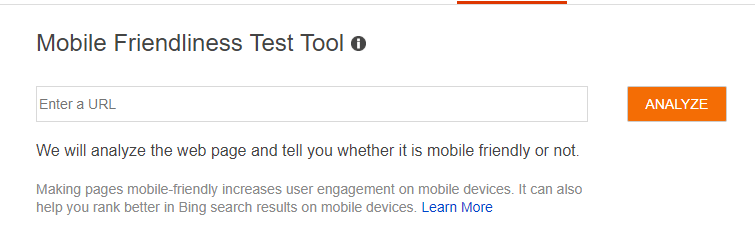

Mobile Friendliness

This tool can be used in BWT or accessed from a direct link. It will analyze a web page and tell you whether it’s mobile-friendly. Here is the standalone tool:

Click Analyze to get a breakdown of the factors that Bing uses to determine mobile-friendliness and exactly which factors need improvement.

Here are some of the main factors Bing uses to determine if a page is mobile-friendly:

- Viewport configuration.

- Zoom configuration.

- Content width.

- Readability of text.

- The spacing of links and other content.
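
The first two factors come down largely to a page’s viewport meta tag, and you can spot-check that locally. This Python sketch approximates those two factors with rough checks of my own choosing. It flags a missing tag, a width other than `device-width` and `user-scalable=no`. These are not Bing’s actual tests and don’t cover every way zoom can be restricted.

```python
from html.parser import HTMLParser


class ViewportParser(HTMLParser):
    """Captures the content of the first <meta name="viewport"> tag."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if (tag == "meta" and self.viewport is None
                and (attributes.get("name") or "").lower() == "viewport"):
            self.viewport = attributes.get("content") or ""


def viewport_issues(html: str) -> list:
    """Rough checks inspired by the viewport and zoom factors; not Bing's actual tests."""
    parser = ViewportParser()
    parser.feed(html)
    if parser.viewport is None:
        return ["missing viewport meta tag"]
    settings = {}
    for part in parser.viewport.split(","):
        key, _, value = part.partition("=")
        settings[key.strip().lower()] = value.strip().lower()
    issues = []
    if settings.get("width") != "device-width":
        issues.append("viewport width is not device-width")
    if settings.get("user-scalable") in ("no", "0"):
        issues.append("zooming is disabled")
    return issues


print(viewport_issues('<meta name="viewport" content="width=device-width, initial-scale=1">'))  # []
print(viewport_issues('<meta name="viewport" content="width=1024, user-scalable=no">'))
# ['viewport width is not device-width', 'zooming is disabled']
```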

Crawl information

This is the status of your URLs from Bingbot’s last crawl, along with the issues Bingbot encountered while crawling your website.

By clicking on the number underneath an error alert, you’ll get a comprehensive list of the URLs returning that particular status or error code. This is one of the tools that flag if and when Bing is having difficulty accessing your site. It’s also the tool I recommend folks go to when they reach out to me personally to ask why a given page on their site isn’t being indexed.
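
If you copy those URL lists out for triage, grouping them by status class makes it easy to hand 4xx and 5xx URLs to the right people. Here’s a minimal Python sketch that assumes rows of (URL, HTTP status code). The report may also list issues that aren’t HTTP status codes, and this sketch doesn’t model those. The function names are hypothetical.

```python
from collections import defaultdict


def group_by_status(rows):
    """rows: (url, http_status) pairs (assumed layout). Groups URLs into 2xx/3xx/4xx/5xx."""
    groups = defaultdict(list)
    for url, status in rows:
        groups[f"{status // 100}xx"].append(url)
    return dict(groups)


def urls_needing_attention(rows):
    """Client (4xx) and server (5xx) errors, in their original order."""
    return [url for url, status in rows if status >= 400]


crawl_rows = [
    ("https://www.example.com/", 200),
    ("https://www.example.com/old-page", 301),
    ("https://www.example.com/missing", 404),
    ("https://www.example.com/search", 500),
]
print(group_by_status(crawl_rows)["3xx"])  # ['https://www.example.com/old-page']
print(urls_needing_attention(crawl_rows))
```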

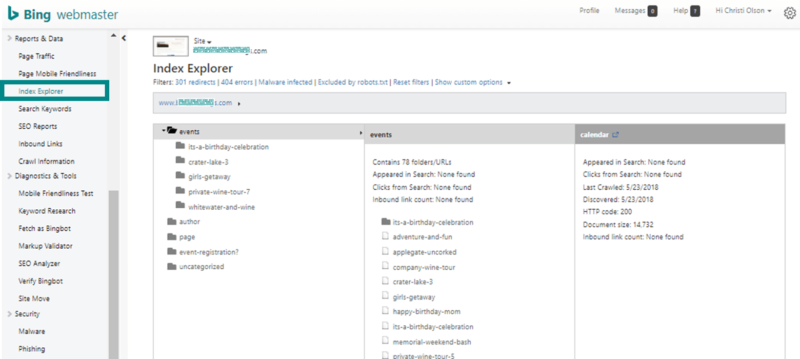

Index Explorer

Index Explorer is a unique tool showing you exactly how Bing sees your website. It reflects all of the URLs we’ve seen for your website, including redirects, broken links and URLs blocked by robots.txt.

It provides you with data for a specific section of your website, including:

- How many URLs have been discovered.

- How frequently they’ve appeared in search.

- How many clicks they’ve received.

- The inbound link counts.

This information can help you get super-granular and understand how Bing sees your website, so you can uncover issues and opportunities for your organic search optimization strategy.
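
If you get URL-level numbers like these into a spreadsheet, a small rollup by top-level directory gives a similar section-by-section view outside the tool. This Python sketch assumes rows of (URL, impressions, clicks, inbound links). That column layout and the function names are my own, not BWT’s format.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def top_directory(url: str) -> str:
    """'/blog/' for https://site/blog/post, '/' for pages that sit at the site root."""
    path = urlsplit(url).path
    segments = [s for s in path.split("/") if s]
    if segments and (len(segments) > 1 or path.endswith("/")):
        return f"/{segments[0]}/"
    return "/"


def summarize_by_directory(rows):
    """rows: (url, impressions, clicks, inbound_links) tuples (hypothetical layout)."""
    summary = defaultdict(lambda: {"urls": 0, "impressions": 0, "clicks": 0, "inbound_links": 0})
    for url, impressions, clicks, links in rows:
        totals = summary[top_directory(url)]
        totals["urls"] += 1
        totals["impressions"] += impressions
        totals["clicks"] += clicks
        totals["inbound_links"] += links
    return dict(summary)


rows = [
    ("https://www.example.com/blog/first-post", 100, 10, 2),
    ("https://www.example.com/blog/second-post", 50, 5, 0),
    ("https://www.example.com/about", 20, 1, 1),
]
for directory, totals in summarize_by_directory(rows).items():
    print(directory, totals)
```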

One tip I’d highly recommend is to start with a smaller website so you get the feel for how Index Explorer works. I attempted to dig in and learn the tool with Microsoft.com’s website, and the site structure is so complex that I felt overwhelmed.

I took a step back and started with a much simpler website and was able to learn the ins and outs of Index Explorer. I’m going to follow up with a more in-depth post about Index Explorer later in this series so you get a very detailed look at this terrific tool. Once you master it, it will provide you with a lot of valuable information.

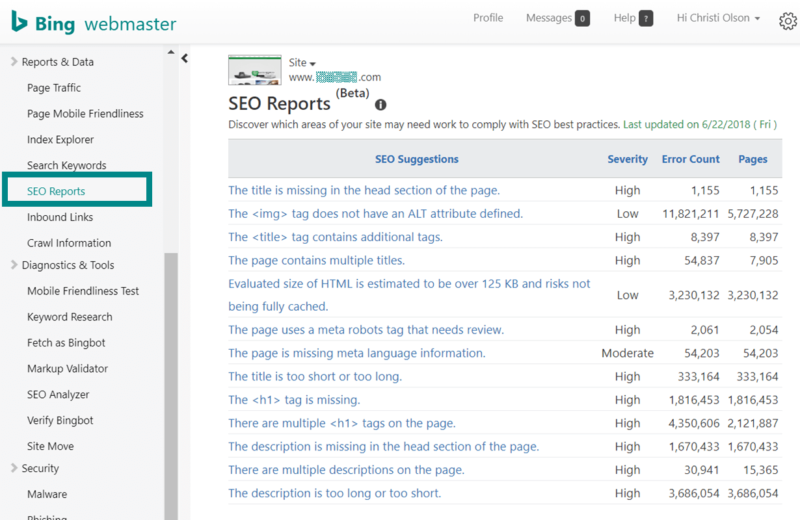

SEO Reports

The SEO Reports tool discovers which areas of your site may need work to conform to SEO best practices. The tool is free and is included in BWT:

The reports are generated and updated every other week and are based on SEO best practices to help you get started with some of the most common page-level optimization recommendations. Click on an SEO suggestion to get an aggregated count of all of the issues found across your entire site and a list of the URLs that are not in compliance with the recommendation.

If you want to check out a specific URL, use the SEO Analyzer in the Diagnostics and Tools section of Bing Webmaster Tools.

I want to point out three things found in the SEO Reports and the SEO Analyzer tool which I find invaluable when optimizing sites. And did I mention they were free to use?

- Learn the basics of on-page optimization. I cut my SEO teeth on the SEO Reports tool set back in the mid-2000s when Duane Forrester was creating them for our internal team to use.

- The report format makes it easy to identify, investigate and understand what you can do to make your site more discoverable and SEO-friendly to any search engine.

- You can copy and paste the performance data and track the progress you are (or are not) making against fixing high-priority issues on your site.
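
Tracking progress can be as simple as comparing the per-suggestion counts from two report runs. Here’s a minimal Python sketch that assumes you’ve pasted each run’s counts into a dictionary. The suggestion names are hypothetical labels, so use the wording from your own report.

```python
def issue_progress(previous: dict, current: dict) -> dict:
    """Change in affected-URL counts per SEO suggestion; negative means fewer issues."""
    names = previous.keys() | current.keys()
    return {name: current.get(name, 0) - previous.get(name, 0) for name in sorted(names)}


# Hypothetical suggestion labels and counts copied from two report runs.
last_run = {"Title missing": 40, "Meta description missing": 120}
this_run = {"Meta description missing": 90, "H1 tag missing": 5}
print(issue_progress(last_run, this_run))
# {'H1 tag missing': 5, 'Meta description missing': -30, 'Title missing': -40}
```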
